Laura Ibáñez

ZEUS

23 SEPT 2023

Laura Ibáñez holds degrees in Electronic Engineering (UPC) and in Music (ESMUC). She is currently studying for a Master’s Degree in Artificial Intelligence Research (UNED) and specialises in applying Deep Learning techniques to music generation. She worked for two years as a Machine Learning engineer for music applications at the startup Pixtunes, and she currently works as a freelancer for AudioShake, a company that specialises in music source separation using artificial intelligence.

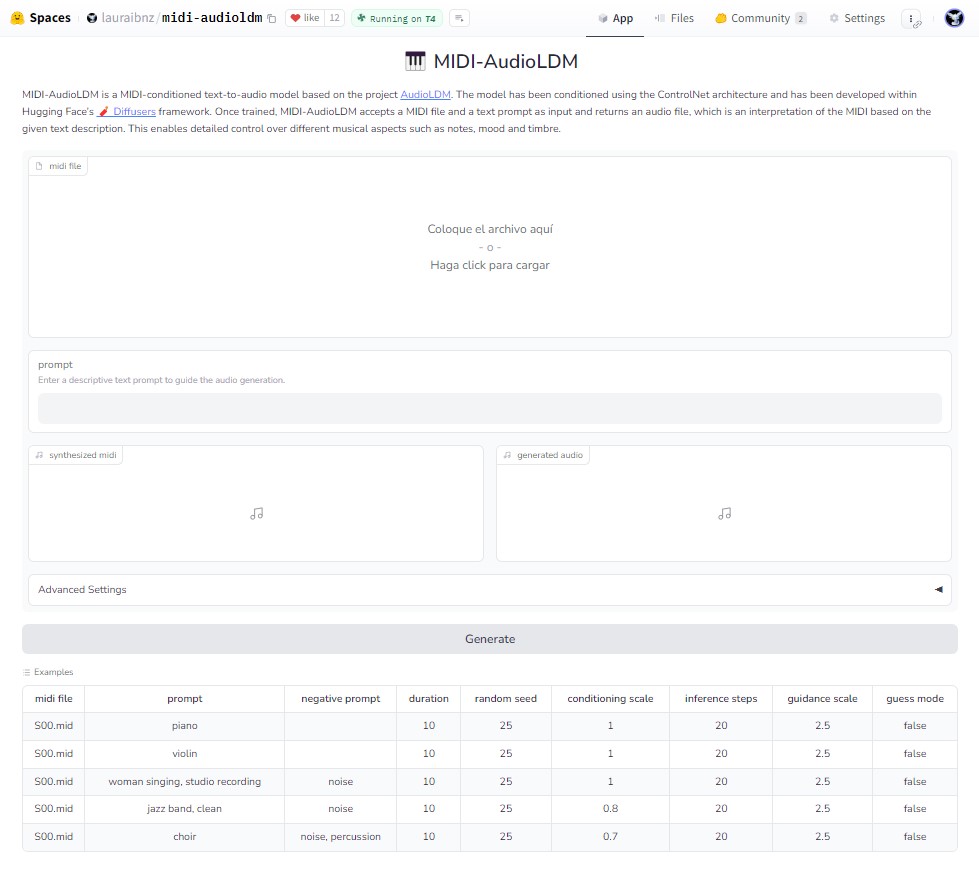

Laura Ibáñez will describe the state of the art in text-to-audio models for music generation, which have emerged following the great success of text-to-image models such as DALL-E and Stable Diffusion. She will present the MIDI-AudioLDM project, developed as her final project for the Master’s Degree in Artificial Intelligence Research at UNED. MIDI-AudioLDM is a MIDI-conditioned text-to-audio model based on the AudioLDM project and developed within Hugging Face's Diffusers library.

Finally, a short workshop will be held in which attendees can try out these models using a selection of Google Colab notebooks and Hugging Face Spaces.

huggingface.co/spaces/lauraibnz/midi-audioldm